Fine-grained sketch-based image retrieval by matching deformable part models

Abstract

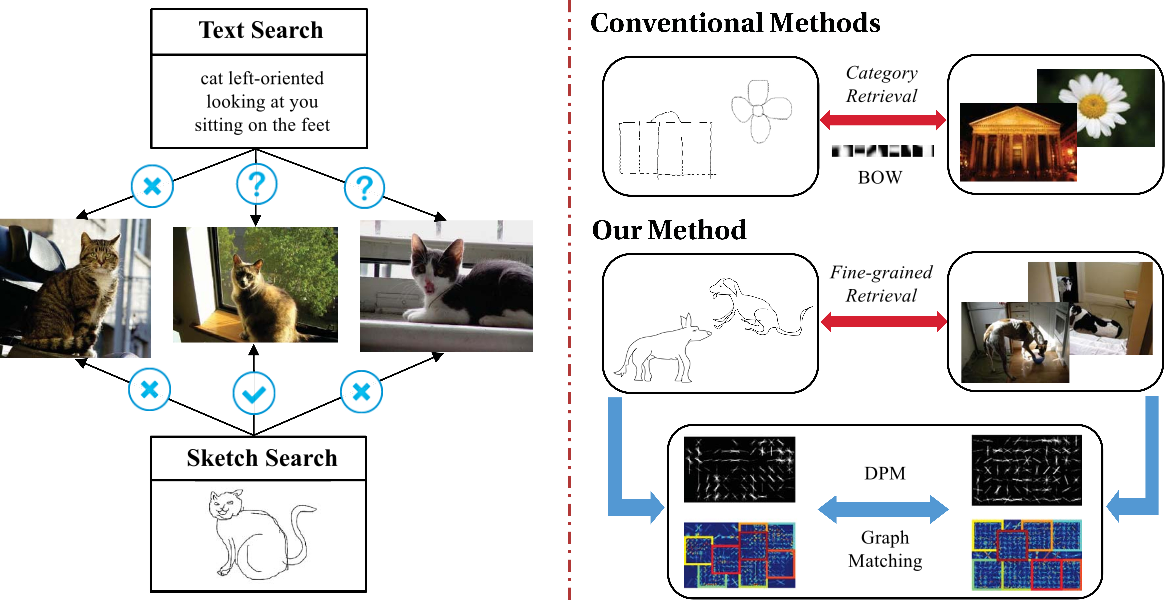

An important characteristic of sketches, compared with text, rests with their ability to intrinsically capture object appearance and structure. Nonetheless, akin to traditional text-based image retrieval, conventional sketch-based image retrieval (SBIR) principally focuses on retrieving images of the same category, neglecting the fine-grained characteristics of sketches. In this paper, we advocate the expressiveness of sketches and examine their efficacy under a novel fine-grained SBIR framework. In particular, we study how sketches enable fine-grained retrieval within object categories. Key to this problem is introducing a mid-level sketch representation that not only captures object pose, but also possesses the ability to traverse sketch and image domains. Specifically, we learn a deformable part-based model (DPM) as a mid-level representation to discover and encode the various poses in sketch and image domains independently, after which graph matching is performed on DPMs to establish pose correspondences across the two domains. We further propose an SBIR dataset that covers the unique aspects of fine-grained SBIR. Through in-depth experiments, we demonstrate the superior performance of our SBIR framework, and showcase its unique ability in fine-grained retrieval.

Dataset

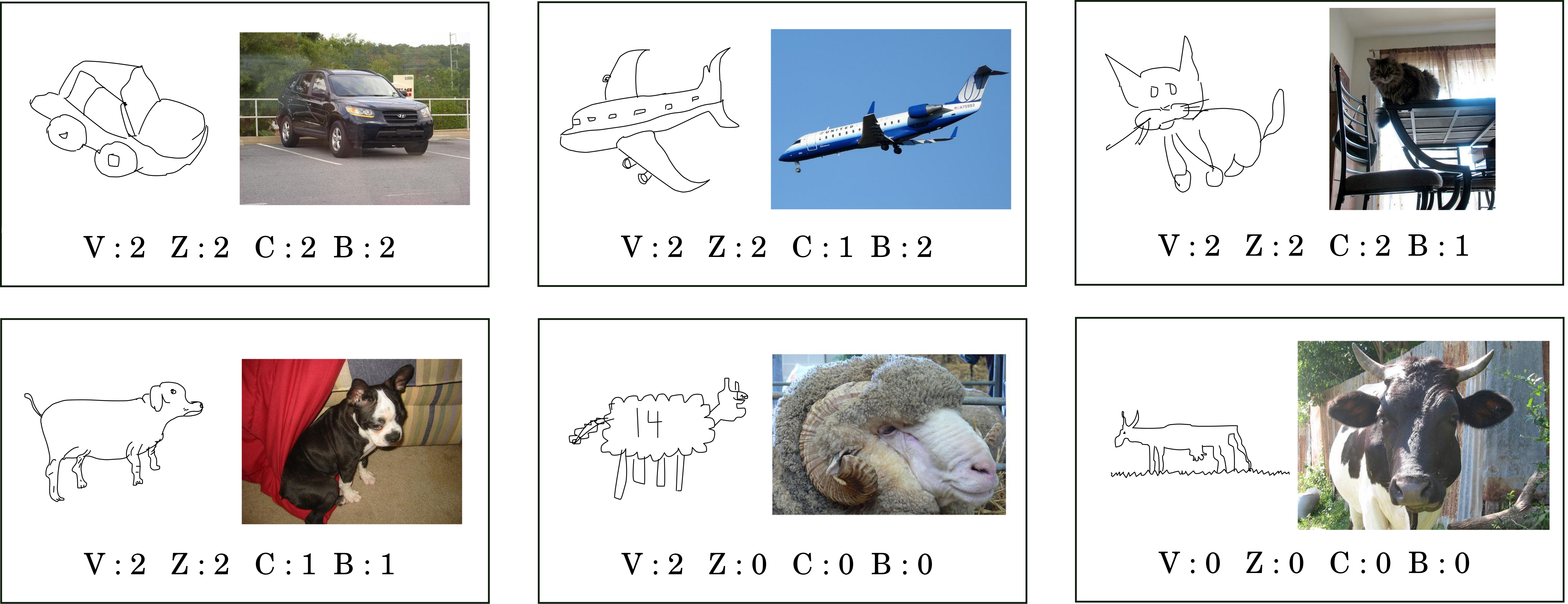

We have manually annotated a cross-domain dataset that contains sketch-image pairwise similarity annotations. Specifically, for each of 14 categories, 6 sketches and 60 images are selected from the TU-Berlin sketch dataset and the PASCAL VOC image dataset respectively, and the similarity of every sketch-image pair within a category is manually annotated. For each sketch-image pair (14 × 6 × 60 = 5,040 pairs in total), we score its similarity in terms of four independent criteria: (i) viewpoint (V), e.g., left or right; (ii) zoom (Z), e.g., head only or whole body; (iii) configuration (C), e.g., position and shape of the limbs; (iv) body feature (B), e.g., fat or thin. For each criterion, we annotate one of three levels of similarity: 0 for not similar, 1 for similar and 2 for very similar (5,040 × 4 = 20,160 annotations in total). Above are some examples of sketch-image pair similarity annotations.
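The counts above can be checked with a short sketch. The record layout below (`PairAnnotation`, `enumerate_pairs`, and the integer indices) is a hypothetical illustration, not the format of the released dataset files; only the category, sketch, image, criterion and level figures come from the description.

```python
from dataclasses import dataclass
from itertools import product

# Figures taken from the dataset description.
NUM_CATEGORIES = 14
SKETCHES_PER_CATEGORY = 6
IMAGES_PER_CATEGORY = 60
CRITERIA = ("V", "Z", "C", "B")  # viewpoint, zoom, configuration, body feature
LEVELS = (0, 1, 2)  # not similar, similar, very similar


@dataclass
class PairAnnotation:
    """Hypothetical record for one sketch-image pair; not the released file format."""
    category: int
    sketch: int
    image: int
    scores: dict  # maps each criterion code to a level in LEVELS

    def __post_init__(self):
        # Reject records that miss a criterion or use an undefined level.
        if set(self.scores) != set(CRITERIA):
            raise ValueError("scores must cover exactly V, Z, C and B")
        if any(level not in LEVELS for level in self.scores.values()):
            raise ValueError("each score must be 0, 1 or 2")


def enumerate_pairs():
    """List every (category, sketch, image) index triple; pairs stay within a category."""
    return list(product(range(NUM_CATEGORIES),
                        range(SKETCHES_PER_CATEGORY),
                        range(IMAGES_PER_CATEGORY)))


pairs = enumerate_pairs()
num_pairs = len(pairs)
num_annotations = num_pairs * len(CRITERIA)
print(num_pairs, num_annotations)  # prints "5040 20160"
```

Keeping the four criteria as separate scores, rather than a single combined value, mirrors the statement that they are independent, so a retrieval result can be evaluated per criterion.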

Download

[Paper] [Bibtex] [Code & Testing dataset]

Results

Airplane

Horse

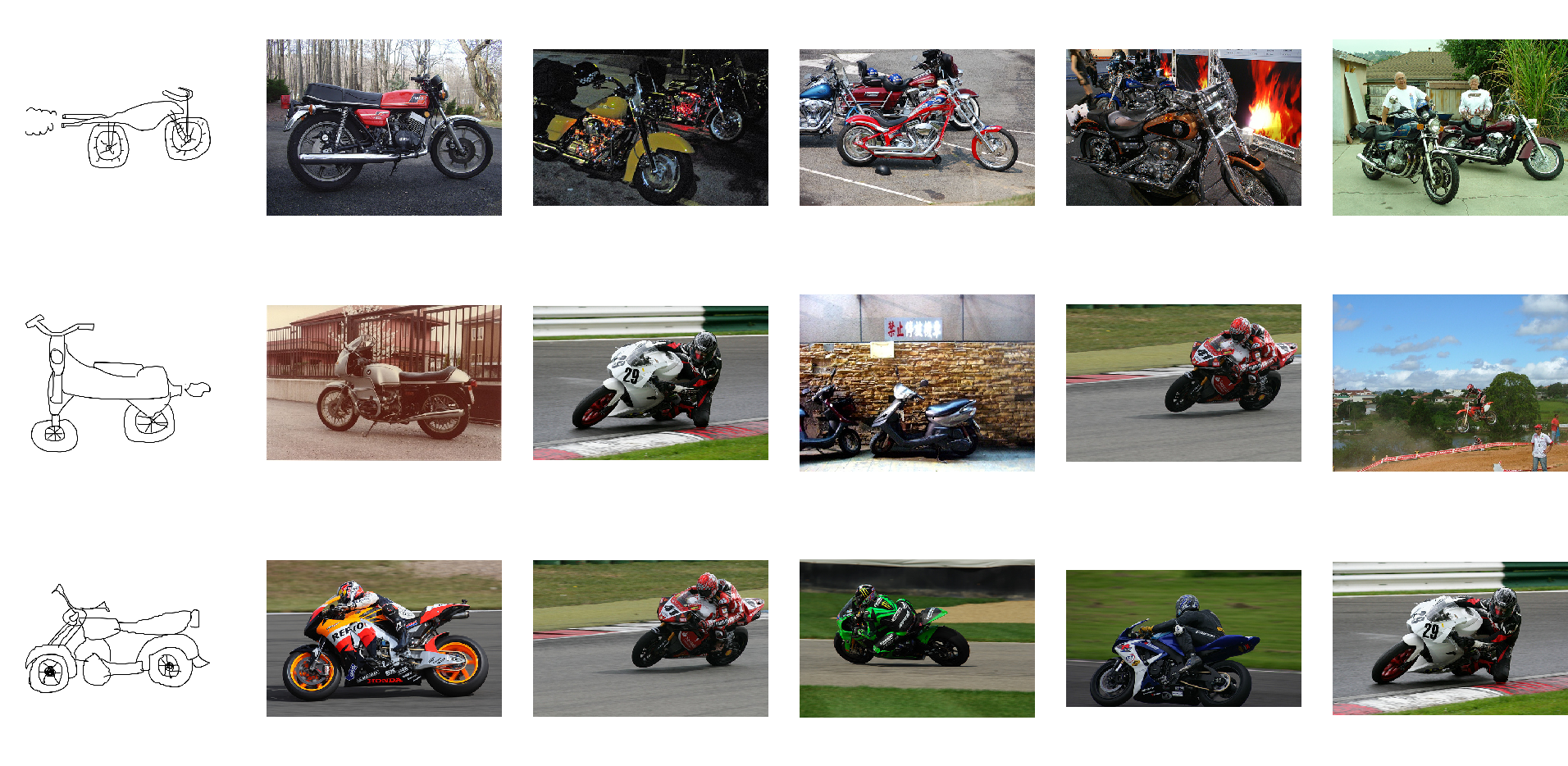

Motorbike